Why Sovereign, Self‑Hosted Assistants Matter

Tools like Cursor, Copilot, Claude Code, and Gemini offer powerful out-of-the-box features, seamless integrations, and vendor-managed updates. They operate within robust compliance frameworks and often bring conveniences such as fast adoption, high-quality code generation, and cost-effective access.

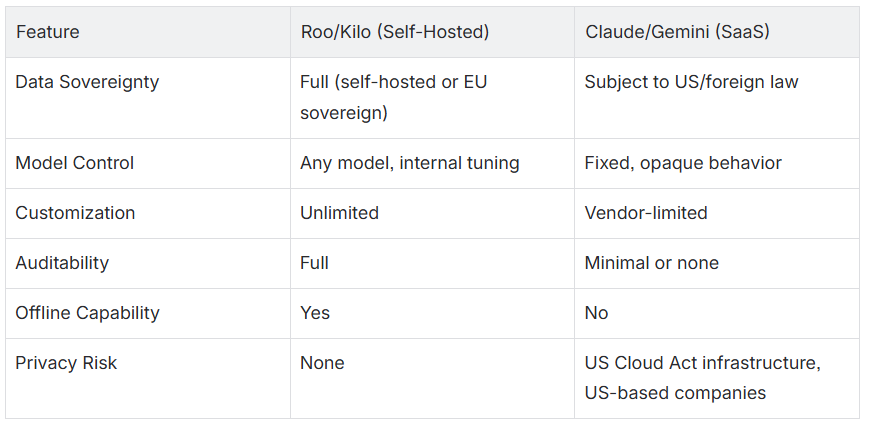

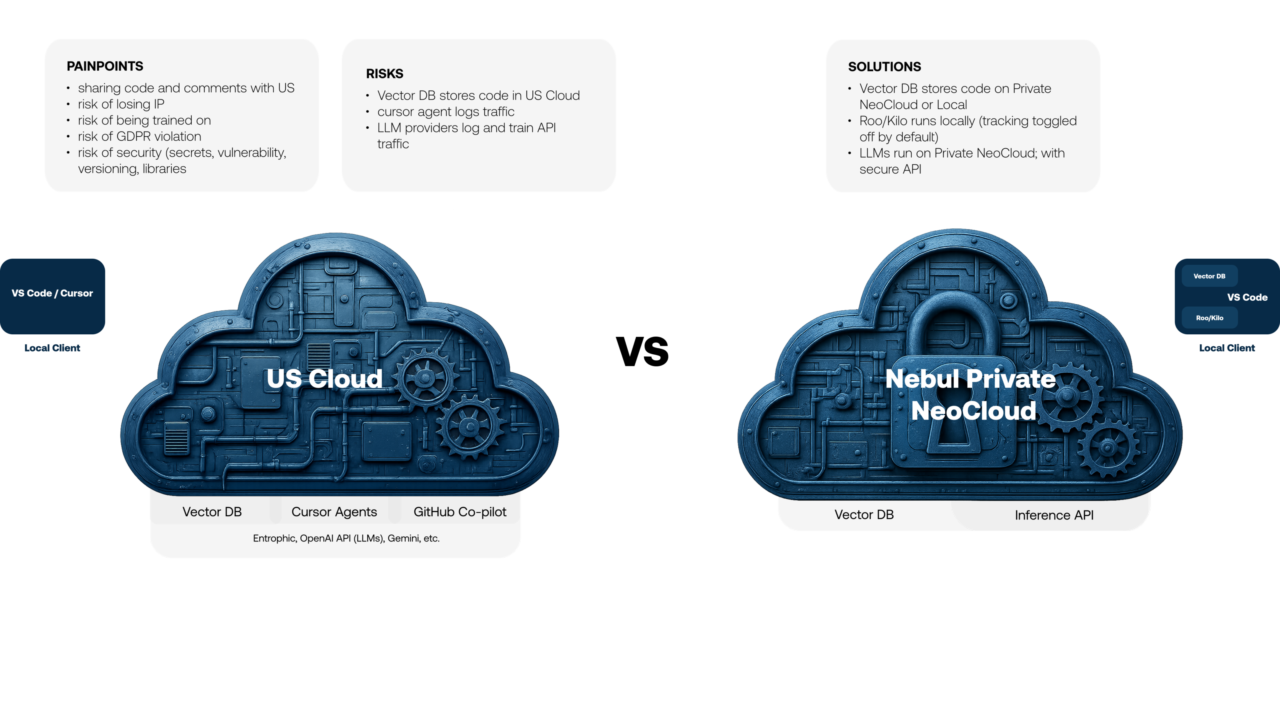

However, they inherently involve sending your code, prompts, and context to external services, even if the traffic is encrypted. This exposes IP and code structure to third-party systems, many of which are themselves hosted on public clouds (e.g. Azure, AWS, or GCP), and it can lead to privacy issues and leakage of proprietary code. In contrast, Roo or Kilo can operate entirely within the (cloud-hosted AI) infrastructure provider of your choice. This keeps code and prompts out of third-party SaaS systems, ensures auditability, supports fine-grained access control, and aligns with GDPR and European regulatory frameworks, especially when paired with sovereign infrastructure like Nebul’s NeoCloud for high availability, speed, and privacy.

Roo and Kilo Advantages

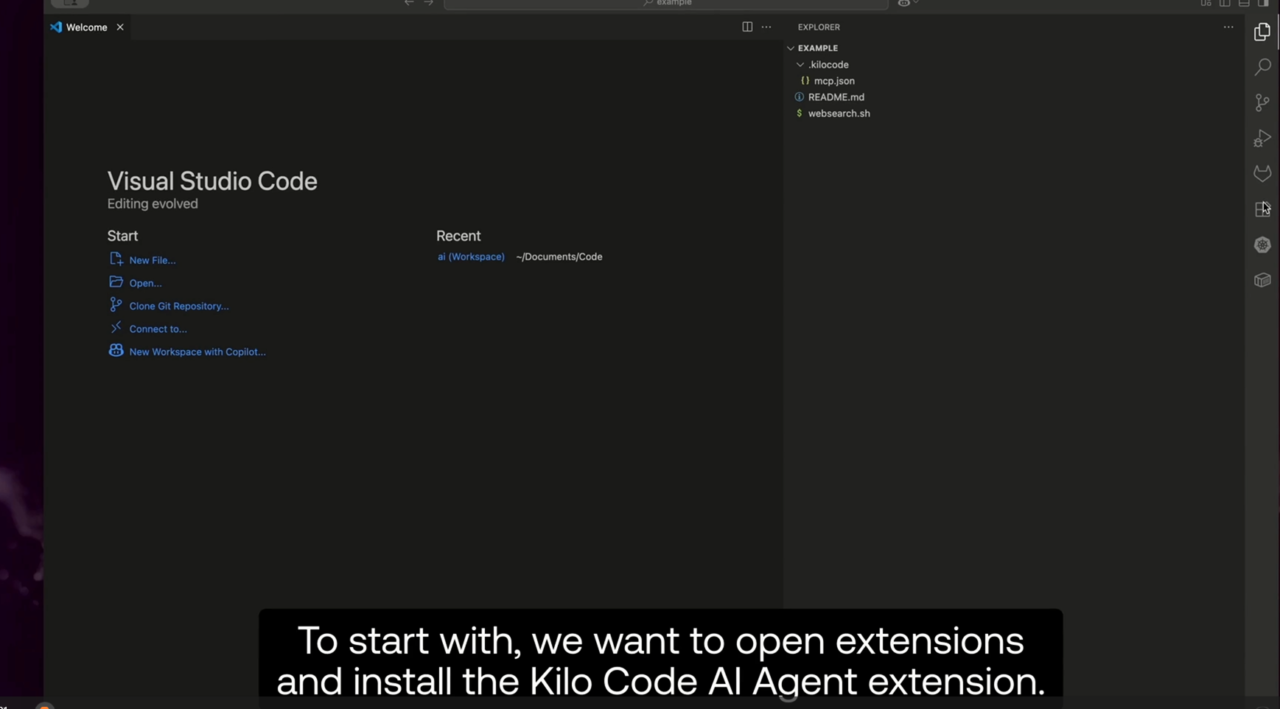

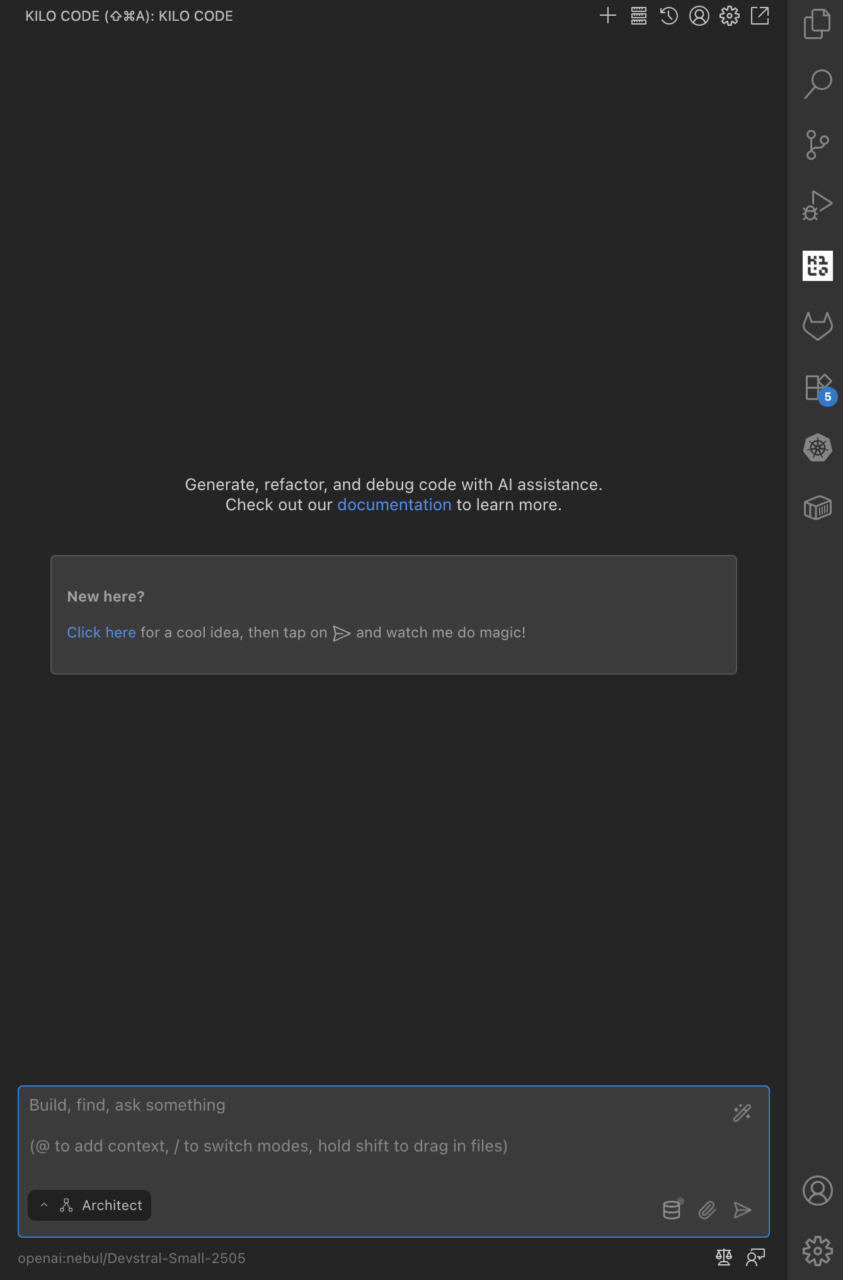

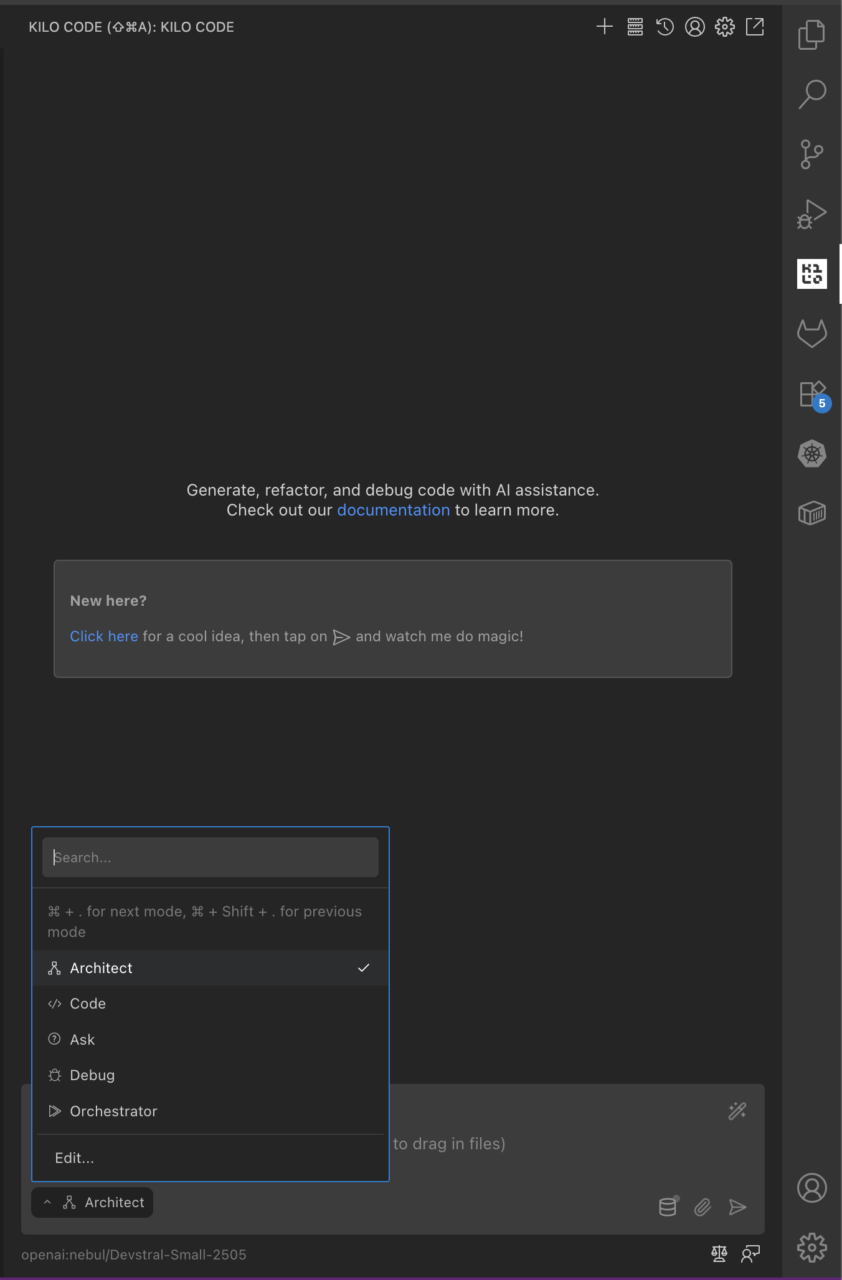

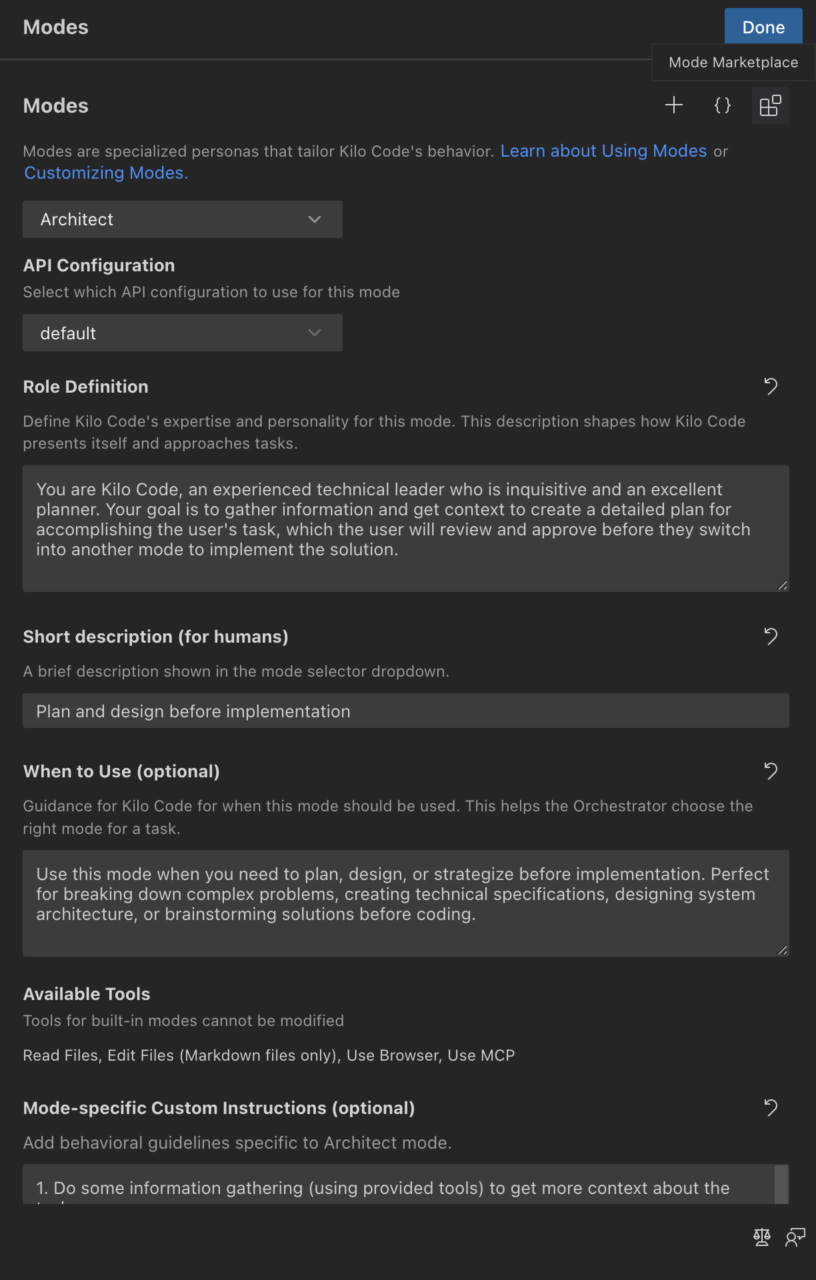

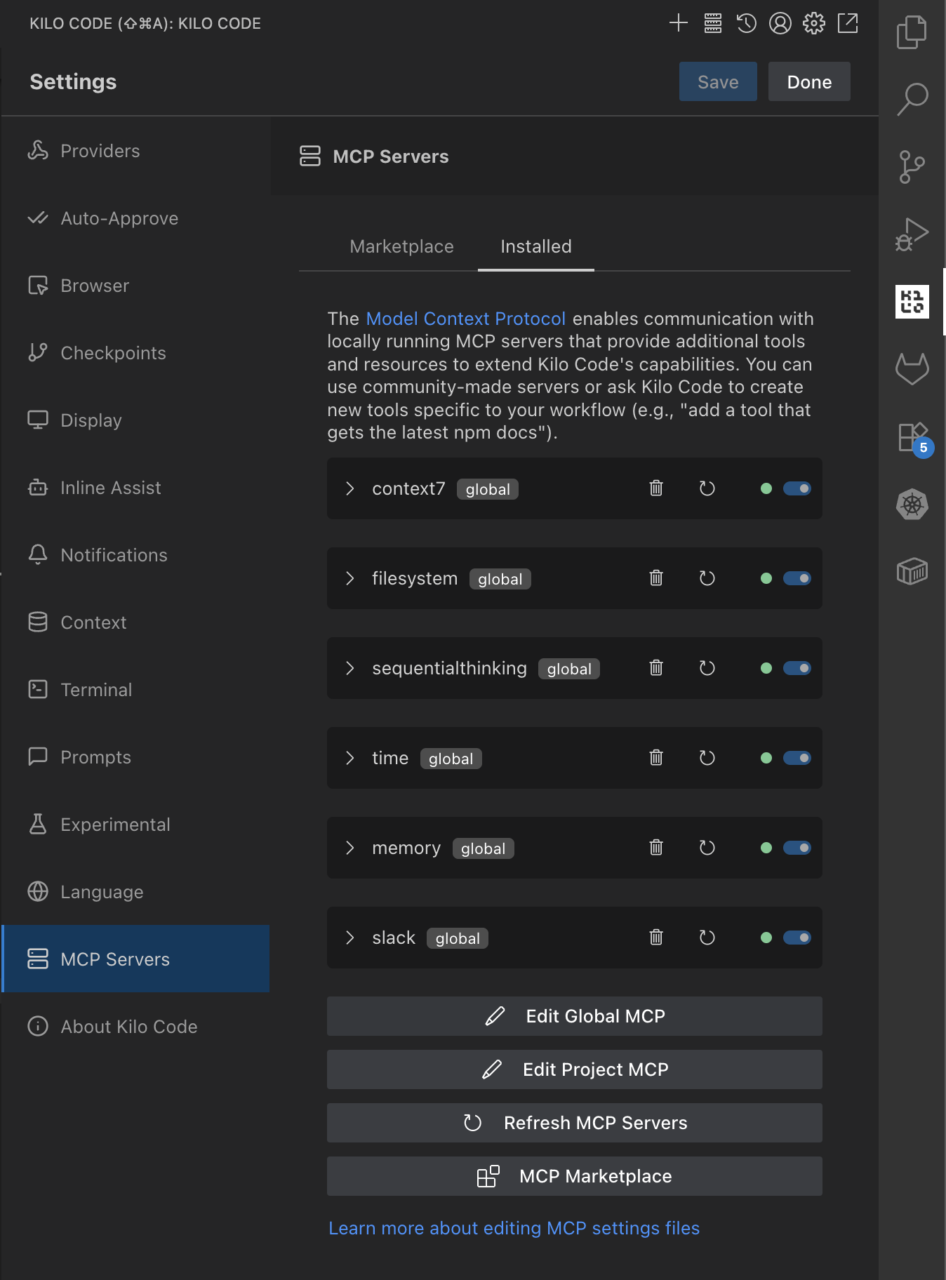

Roo Code offers powerful AI assistance: file reads and writes, terminal operations, browser actions, custom modes such as Architect, Code, Ask, and Debug, and extensibility through MCP (Model Context Protocol) integration.

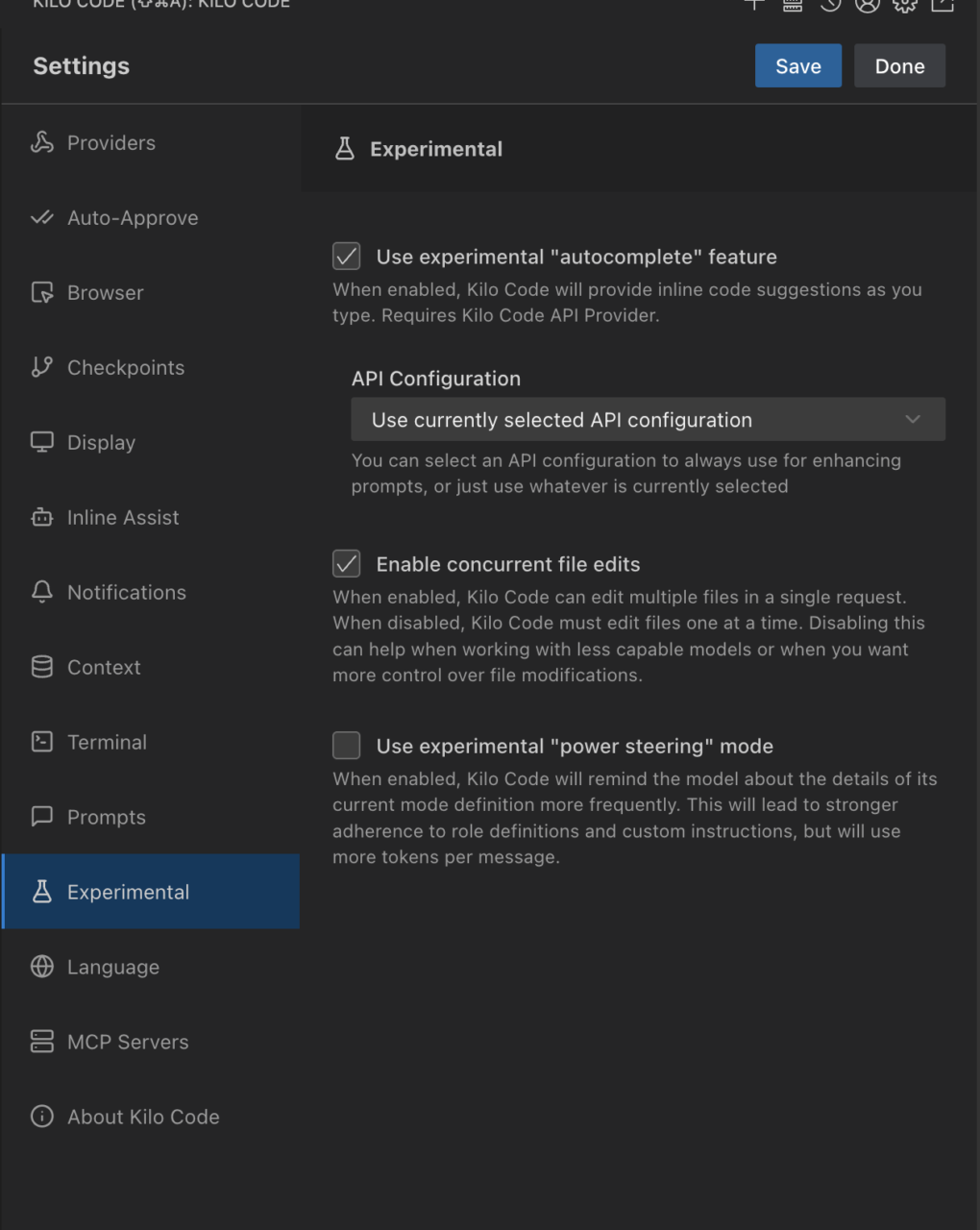

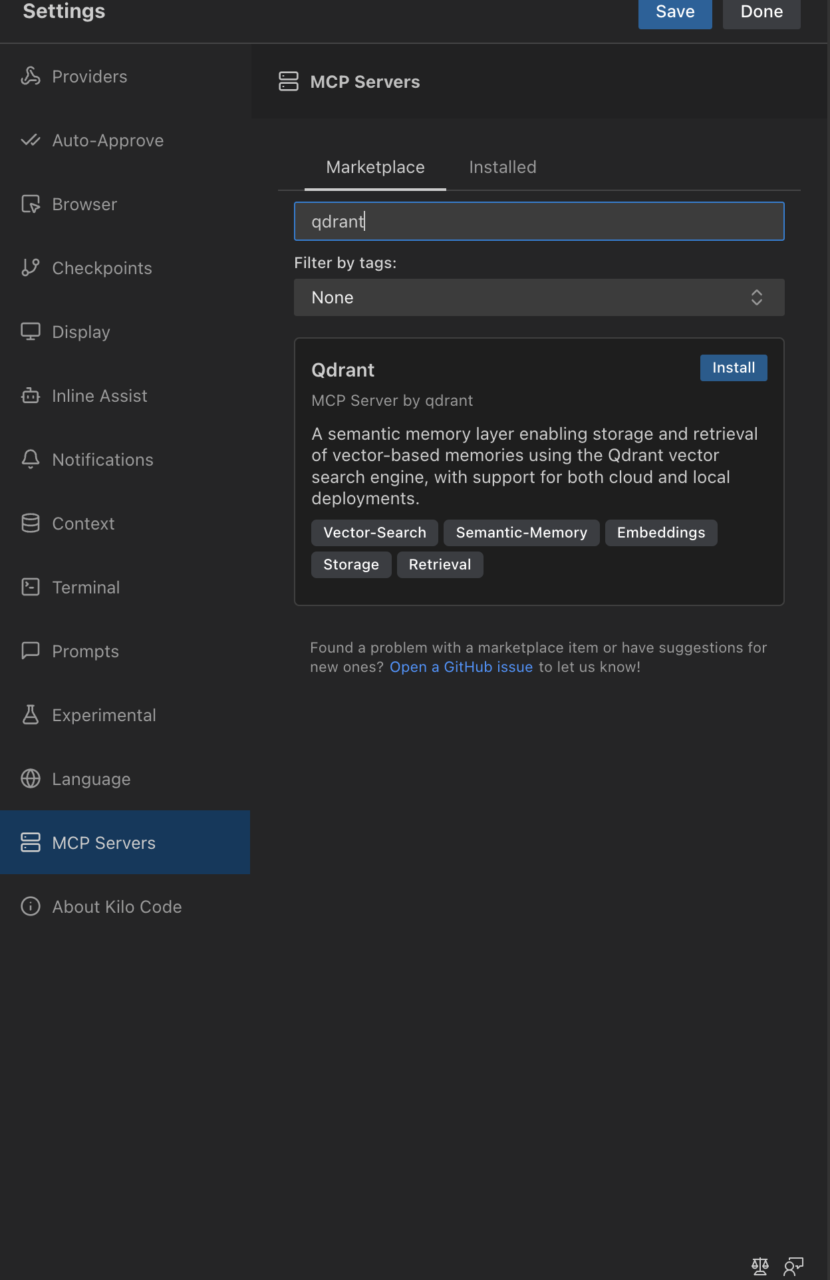

Kilo Code adds accessibility, context management, and merged improvements from Cline and Roo. It features the MCP marketplace, multi-mode workflows, hallucination mitigation, and seamless integration with modern models, all while remaining open-source, self-hosted and highly customizable for any team and workflow.

Community voices support these choices:

“… the only ones I recommend are cline/roo code… roo code is a fork of cline with more features”

Privacy and Security

Academic research highlights that many VS Code extensions can unintentionally expose credentials or sensitive input across extension boundaries. Roo and Kilo, being open-source and self-hosted, give teams control over dependencies and configuration, reducing this risk surface.

Other research emphasizes that public coding assistants can expose proprietary code inadvertently. Using Roo/Kilo with an internal model or via Nebul’s Private Inference API removes that exposure entirely.

Sovereignty and Compliance

With Nebul’s Private Inference API, teams can deploy models via API under EU jurisdiction, maintaining full alignment with GDPR, the EU AI Act, and local sovereignty requirements. This approach offers full control over compute infrastructure, audit logs, and regulatory compliance, especially compared to SaaS offerings like Claude or Gemini, even those offering privacy guarantees.
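
To make the idea of keeping traffic inside approved infrastructure more concrete, here is a minimal Python sketch of a client-side guard. It assumes the private endpoint speaks an OpenAI-style chat-completions protocol, which this article does not confirm for any particular provider. The host name, base URL, and the `build_chat_request` helper are all hypothetical placeholders, not part of Roo, Kilo, or Nebul. The function only builds the request and refuses any host that is not on an approved list; it does not send anything.

```python
import json
import urllib.request
from urllib.parse import urlparse

# Hypothetical values: replace with the details of your own deployment.
APPROVED_HOSTS = {"inference.example.internal"}
BASE_URL = "https://inference.example.internal/v1"


def build_chat_request(prompt: str, model: str, api_key: str,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a chat-completions style request.

    Raises ValueError if the target host is not on the approved list,
    so prompts and code are never addressed to an unexpected endpoint.
    """
    host = urlparse(base_url).hostname
    if host not in APPROVED_HOSTS:
        raise ValueError(f"host {host!r} is not an approved inference endpoint")

    # Assumed payload shape (OpenAI-style); adjust to your provider's API.
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

A request built this way could be passed to `urllib.request.urlopen` once the endpoint details are known. Keeping the allow-list check separate from the network call makes it easy to unit-test and to log for audit purposes.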